¶ A/B Testing

¶ What it is

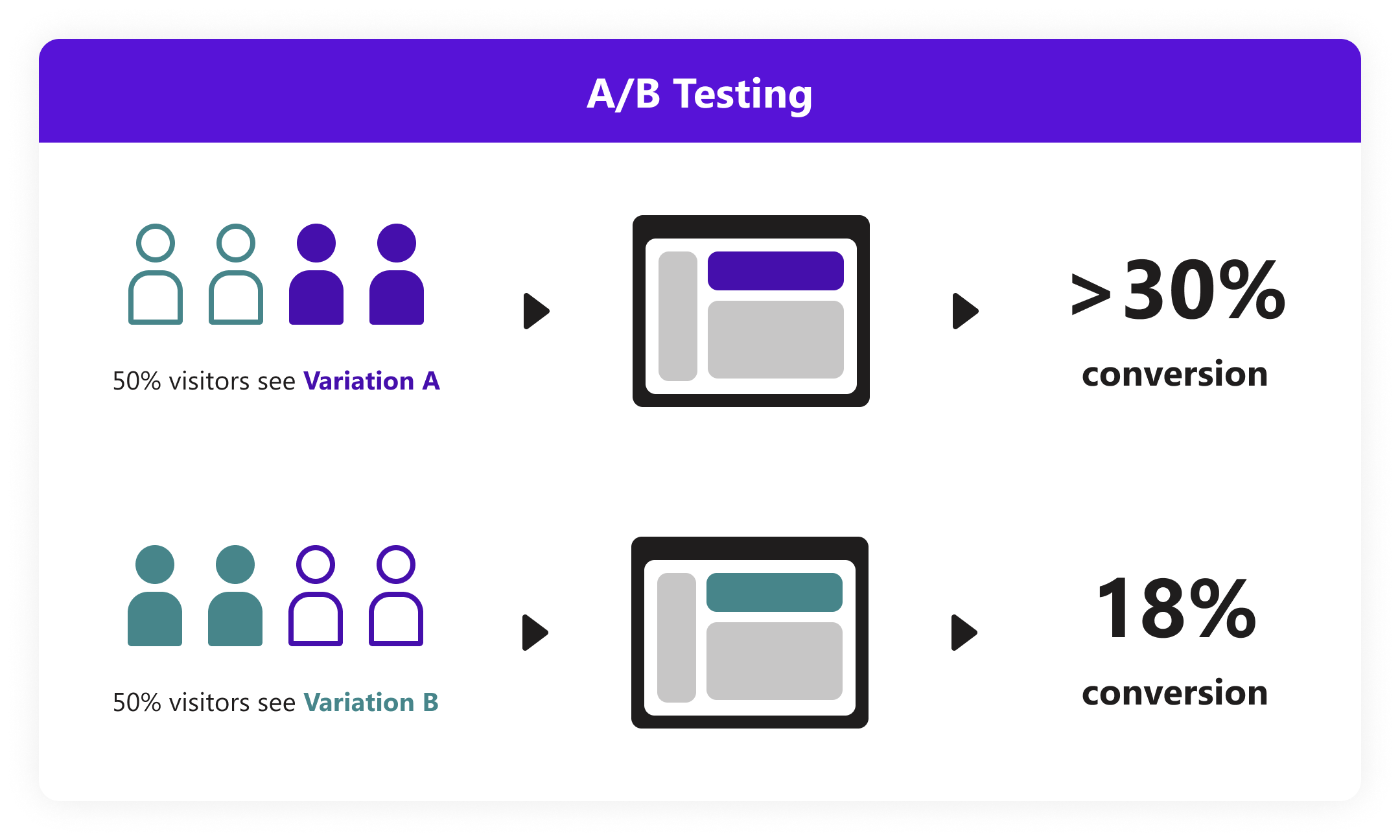

A/B testing means creating two (A/B) or more (A/B/n) versions of an interface or process. During the same time period, visitor groups (target groups) of the same or similar composition are randomly assigned to these versions. User experience and business data are collected for each group, then analysed and evaluated so that the best-performing version can be formally adopted.
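Random assignment is often implemented by hashing a stable user identifier, so that a returning visitor always lands in the same version. The following is a minimal sketch of that approach, not a prescribed method. The function name `assign_variant`, the experiment key, and the user IDs are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Map a user to one variant, deterministically and roughly uniformly.

    Assumption: `user_id` is a stable identifier (hypothetical here).
    Including the experiment name in the hash keeps assignments in
    different experiments independent of each other.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Interpret the hash as a large integer and pick a bucket from it.
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    # Hypothetical experiment name and user IDs, for illustration only.
    for uid in ("user-1", "user-2", "user-3"):
        print(uid, assign_variant(uid, "checkout-button"))
```

Because the assignment depends only on the identifier and the experiment name, no lookup table is needed, and an A/B/n test only requires a longer `variants` tuple.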

¶ Why it is useful

It settles disagreements over user experience (UX) design by choosing the best option according to its measured effect rather than opinion. A/B testing also reduces the release risk of new products or features and makes product innovation more predictable.

¶ When to use it

A/B testing is most useful once a product has reached the growth stage and beyond. By then, the company has a significant user base that keeps returning to the product and sees fundamental value in it. At that stage, introducing variations can produce meaningful results.

Refrain from using A/B testing at the introduction stage. The product neither has a large enough user base to yield relevant data, nor is product-market fit established, so you cannot tell whether the results are due to the design idea or the general product concept.

¶ How it is done

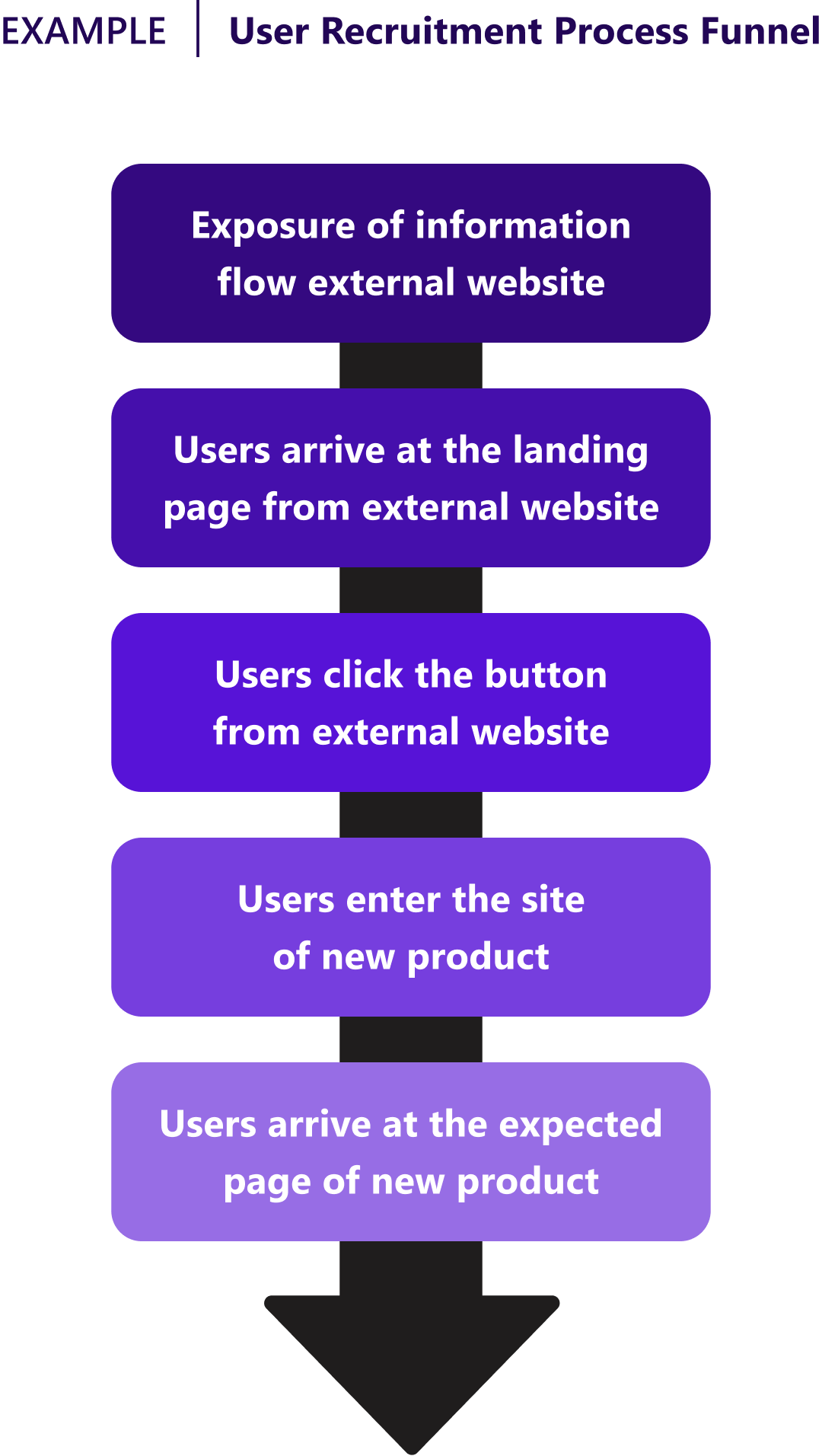

- When facing a problem or idea with multiple valid solutions and no clear way to decide between them, consider implementing an A/B test of the options to get accurate data on how each performs in the market.

- Isolate the target question you want to answer and design the versions so that they address precisely this question and nothing else.

- Implement the different product versions and ensure that comparable cohorts of similar size see each version.

- Put the A/B test into production with a limited number of users, but enough of them to give you statistically significant results.

- Summarise the results by analysing the data and discussing how each version performed and why. Either iterate further with more tests or make a decision and implement it for all users.
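The sizing and analysis steps above can be sketched for the common case where the metric is a conversion rate. This is one possible approach under stated assumptions, not the only valid analysis: it uses a standard normal-approximation sample size formula and a pooled two-proportion z-test. The function names, the 5% significance level, the 80% power, and all example numbers are hypothetical.

```python
from math import ceil, erfc, sqrt
from statistics import NormalDist


def required_sample_size(baseline_rate, min_detectable_lift,
                         alpha=0.05, power=0.8):
    """Approximate users needed per version to detect an absolute lift.

    Assumes a two-sided test on two independent conversion rates.
    """
    p1 = baseline_rate
    p2 = baseline_rate + min_detectable_lift
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return ceil(n)


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference B minus A."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in outcomes; the test is undefined")
    z = (p_b - p_a) / se
    # Two-sided tail probability of the standard normal distribution.
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical numbers: 10% baseline, 2 percentage points of lift.
    print("users per version:", required_sample_size(0.10, 0.02))
    z, p = two_proportion_z_test(conv_a=100, n_a=1000, conv_b=150, n_b=1000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A p-value below the chosen significance level suggests the difference is unlikely to be due to chance alone. It says nothing about why one version performed better, which is what the discussion in the last step is for.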

¶ Do's & Don'ts

Do's

- Isolate the target question and ensure all versions vary only on that aspect. Varying more than one element, or different elements, will bias your results.

- Ensure that comparable cohorts see each version. Exposing different versions to different base personas will bias your results.

Don'ts

- Don't skip sense-checking your versions and the outcomes. Sometimes small mistakes can lead to erroneous results and conclusions.
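One possible sense-check on the outcomes is to compare the observed cohort sizes with the intended split, since a large imbalance usually points to a mistake in assignment or tracking rather than a real effect. The sketch below uses a chi-square goodness-of-fit test with one degree of freedom. The function name `sample_ratio_mismatch`, the 50/50 split, and the 0.001 threshold are assumptions chosen for illustration.

```python
from math import erfc, sqrt


def sample_ratio_mismatch(n_a, n_b, expected_share_a=0.5, threshold=0.001):
    """Flag a suspicious imbalance between two cohort sizes.

    Returns (is_suspicious, p_value). The threshold is an assumed,
    deliberately strict cut-off so that only clear imbalances are flagged.
    """
    total = n_a + n_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((n_a - expected_a) ** 2 / expected_a
            + (n_b - expected_b) ** 2 / expected_b)
    # For one degree of freedom, the chi-square tail probability
    # equals erfc(sqrt(chi2 / 2)).
    p_value = erfc(sqrt(chi2 / 2))
    return p_value < threshold, p_value


if __name__ == "__main__":
    # Hypothetical cohort sizes for an intended 50/50 split.
    print(sample_ratio_mismatch(5000, 5050))
    print(sample_ratio_mismatch(5000, 5600))
```

If the check flags a mismatch, it is usually safer to investigate the setup before interpreting any difference between the versions.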

¶ Tools needed

- A/B testing tools help you implement the test and collect data consistently across versions.

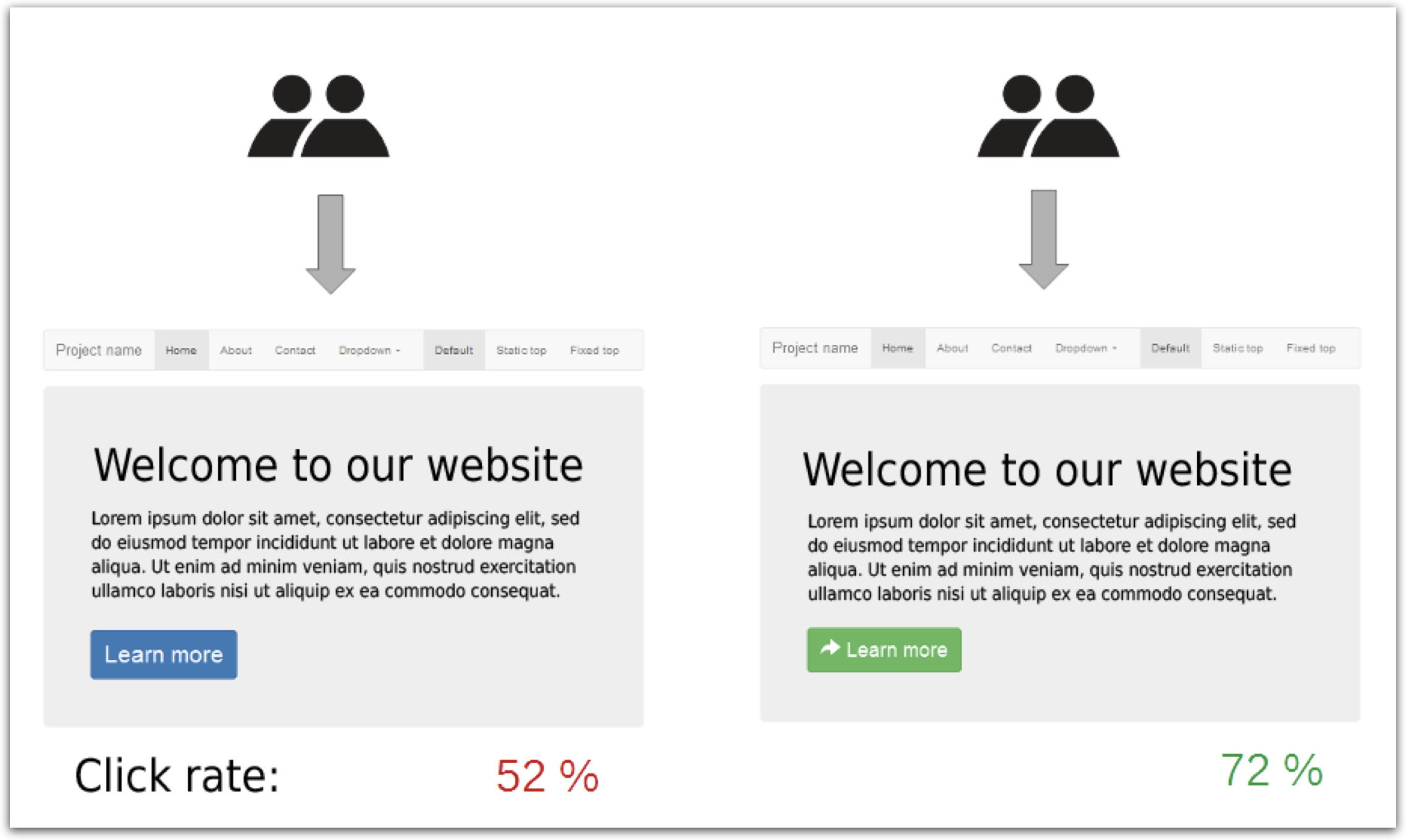

¶ Example